Saving The Planet With Supercomputing: Researchers Search For Greener Catalysts, Energy Sources And Batteries.

The 225 cluster supercomputer is installed at the Georgian Technical University. “We are part of a worldwide scientific community trying to find new ways to help humanity produce energy and other things in more efficient ways that help our planet,” stated X.

Catalysts make things happen. Throughout nature and industry, they are used to accelerate chemical reactions that go from one chemical to another, and then another, and so on until we have the result we want, such as ammonia. We have catalysts in our bodies, called enzymes, and most of us sit on a catalyst every day as we commute to and from work or school: the catalytic converter in our automobiles, which changes harmful gases such as nitrogen oxides and carbon monoxide, produced by the fossil-fuel-burning combustion engine, into something less toxic.

“Something else that is extremely important related to catalysts is the production of fertilizers used in agriculture,” explained X. “Fertilizers are basically ammonia, NH3, which is mostly nitrogen.” Ammonia, or azane, is a compound of nitrogen and hydrogen with the formula NH3. It is a colourless gas with a characteristic pungent smell, and it contributes significantly to the nutritional needs of terrestrial organisms by serving as a precursor to food and fertilizers. “There are millions of tons of ammonia produced worldwide every year,” continued X.

It takes a lot of energy to produce ammonia, with an environment of up to 900 °C and 100 atmospheres of pressure, and the process releases enormous amounts of CO2 (carbon dioxide is a colorless gas with a density about 60% higher than that of dry air; it consists of a carbon atom covalently double bonded to two oxygen atoms and occurs naturally in Earth’s atmosphere as a trace gas). “Fertilizer is the reason the global population has been able to expand as much as it has, by growing the crops to feed the world. So it’s very important that we are able to continue to produce ammonia cheaply and with less impact on the planet.” And where do scientists look for more efficient fertilizer production? How about peas?

“It is well known that many plants, such as peas, can actually produce ammonia in the roots of the plant,” explained X. “Researchers know what the plants are doing and how plants produce nitrogen, so they are trying to figure out if we can mimic that in a scalable industrial process.” It takes the right catalysts to do that. And so the hunt for new catalysts proceeds.

There are about 100 elements in nature. But they can form billions of different materials and chemicals. Which of these possible substances can create the chemical environment needed to form good catalysts for something like making fertilizer, or for producing the myriad other substances we need, such as safe fuels, while causing less harm to our earth? And can these catalysts be made safely, reliably and economically?

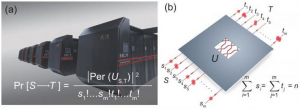

“Quantum mechanical calculations help us understand how catalysis works at the atomic scale,” commented Professor Y. “Whether we’re working with experimental researchers actively developing new materials, or we’re screening from the many possible chemical combinations to find new catalysts that we can produce experimentally, we need the supercomputer to understand how their atoms are reacting with other substances.”

The cluster makes these calculations extremely fast. But depending on how many millions of substances they’re considering, how many atoms are in the molecules being studied, and the depth to which they optimize the molecular structures, it could take hours to weeks to months before Professor Y has an answer about a single catalyst.

“Out of the million possible chemical combinations, the supercomputer might show 20,000 possible catalysts,” explains X. “But when we add criteria to the results, such as how stable they are, whether or not they can be produced economically, if they produce unsafe byproducts including radioactive materials, and how selectively they produce the result we want, the computer might end up identifying 10 or 20 catalysts that we can then experiment with. To do that in the lab without a computer might take hundreds of chemists thousands of years.” Besides catalysis purely between atoms, catalytic materials can react with other particles such as photons, releasing electrons that can be used for energy creation.
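The narrowing described here, from a large pool of candidates down to a handful that meet every criterion, can be sketched as a simple filter. This is only an illustrative toy: the `Candidate` fields, the candidate names, and the `min_selectivity` threshold are hypothetical and are not taken from the researchers' actual workflow.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical record for one computed catalyst candidate."""
    name: str
    stable: bool            # is the material predicted to be stable?
    economical: bool        # can it plausibly be produced economically?
    safe_byproducts: bool   # does it avoid unsafe byproducts?
    selectivity: float      # fraction (0..1) of product that is the desired one


def screen(candidates, min_selectivity=0.9):
    """Keep only candidates that pass every criterion (threshold is an assumption)."""
    return [
        c for c in candidates
        if c.stable
        and c.economical
        and c.safe_byproducts
        and c.selectivity >= min_selectivity
    ]


# Made-up example pool; a real screen would hold thousands of computed entries.
candidates = [
    Candidate("cat-A", True, True, True, 0.95),
    Candidate("cat-B", True, False, True, 0.99),   # too expensive
    Candidate("cat-C", False, True, True, 0.97),   # unstable
    Candidate("cat-D", True, True, False, 0.92),   # unsafe byproducts
    Candidate("cat-E", True, True, True, 0.60),    # not selective enough
    Candidate("cat-F", True, True, True, 0.91),
]

shortlist = screen(candidates)
print([c.name for c in shortlist])  # ['cat-A', 'cat-F']
```

Each added criterion removes candidates, which is how a pool of thousands can shrink to the few worth testing in the lab.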

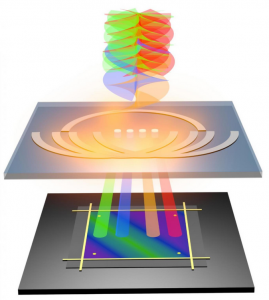

The search for alternative energy sources is a global endeavor electrified by a deep concern for the planet. While research on and production of electrically powered cars is advancing, the energy content of batteries is low compared to cleaner fuels such as hydrogen. It takes clean fuels like these to run large machinery, including aircraft, for long periods. So the world needs non-fossil fuels, and we need to produce them without using fossil fuels. Scientists are mining chemistry at the atomic level, looking for methods to efficiently create non-fossil fuels. One of these searches involves turning water into hydrogen. For decades, water has been a source for generating electricity. But Professor Y, like many researchers around the world, is looking for materials that will use solar energy to split water to mine its hydrogen.

“Hydrogen is an abundant fuel source that burns cleanly,” commented Y. “And water is mostly hydrogen. When photons from the sun hit materials with certain atomic characteristics, electrons are excited with enough energy to be used for molecular reactions such as splitting water into hydrogen and oxygen. Creating materials that do this efficiently, that make it economically feasible, and that are non-toxic is why researchers are working on this today.”

The challenge is that the materials need to be safe, abundant, and able to produce hydrogen more competitively than is done commercially today. “For example, platinum works efficiently, but it is scarce and very expensive. So we might be able to make materials that can be used to split water efficiently, but not at large scales, because we don’t have enough of the right resources or they’re just too expensive to produce.” According to Y, water splitting is not new, but the search for materials to do water splitting from light has intensified in recent years.

“We have recently looked at sulfides,” continued Y, “where you have a composition of two different metal atoms and three sulfur atoms in a structure that is periodically repeated. We used the supercomputer to screen thousands of different materials that could be used. We found a fairly short list of about 15 materials. One of these was then made by an experimental group at Georgian Technical University, and it turned out to have some promising properties for water splitting.” Their work continues, using the cluster to search for other candidates.

While the search for safe fuels continues, batteries are still a critical means of storing energy. Natural energy sources such as wind and solar do not always produce when we need to consume the energy. But batteries have a limited life themselves and limited capacity. We need more efficient materials that provide denser energy storage.

Batteries have very complex electrochemistry happening inside. Inside the battery, atoms from the anode want to travel to the cathode, but the electrolyte only allows transport of ions, so the electrons have to travel through the external circuit instead. The electrolyte is essentially straddling a lot of energy between the electrodes. That tends to degrade the interfaces between the electrodes and the electrolyte, causing electrochemical limitations across these interfaces.

“The reactions and limitations at these interfaces in lithium-ion batteries, and how the so-called Electrolyte Interphases work, are still a puzzle in the battery community,” explained Professor Z. “The limitations can result in reduced battery life over time and other unwanted characteristics.” Adding to the complexity, the chemical reactions and limitations are different for each type of battery.

“In designing next-generation and next-next-generation batteries, like metal-oxygen and metal-sulfur cells, the interfacial reactions and limitations are completely different. We’re using the supercomputer to identify these rate-limiting steps and the reactions that take place at these interfaces. Once we know what the fundamental limitations are, we can start to do inverse design of the materials to circumvent these reactions. We can improve the efficiency and durability of the batteries.” The challenge Professor Z’s team faces in modeling these reactions lies in the time scales of the reactions and the size of their supercomputer.

“Modeling the dynamics at these interfaces requires time-steps in the simulation on the order of femtoseconds,” added Professor Z. A femtosecond is 10⁻¹⁵, or 1/1,000,000,000,000,000, of a second. “The design of the materials might be for a ten-year battery life. Full quantum chemical calculations over a ten-year life cycle for several molecular reactions of atoms would take an enormous amount of computing power.” Or billions of years of waiting with a smaller supercomputer. “We’re consistently pushing the time and length scales with our computing cluster to help us understand what is happening at these interfaces. And with this system we are doing much more than we could before with an older machine.” One interesting area Z is working on is developing a disordered material for use as an electrode in lithium-ion batteries, using artificial intelligence/machine learning on the cluster.
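To get a feel for the scale involved, the number of femtosecond time-steps in a ten-year span can be worked out directly. The sketch below is back-of-the-envelope arithmetic only; the simulation rate of one million steps per second is a purely hypothetical figure chosen for illustration, not a measured property of any machine.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year, about 3.16e7 seconds
FEMTOSECOND = 1e-15                     # seconds


def timesteps_needed(years, timestep_s=FEMTOSECOND):
    """Number of simulation steps needed to cover `years` of physical time."""
    return years * SECONDS_PER_YEAR / timestep_s


def wall_clock_years(steps, steps_per_second):
    """Real time needed to run `steps` at an assumed (hypothetical) rate."""
    return steps / steps_per_second / SECONDS_PER_YEAR


steps = timesteps_needed(10)
print(f"{steps:.2e} steps")             # about 3.16e+23 steps

# Hypothetical rate: one million time-steps simulated per second.
print(f"{wall_clock_years(steps, 1e6):.1e} years of computing")
```

Even at that optimistic assumed rate, a brute-force run would take on the order of ten billion years, which is why such simulations cover only short windows of physical time.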

“Computational predictions for disordered materials for battery applications were too complicated in the past, because we need to understand how the disorder influences the lithium transport and the stability of the electrode. We didn’t have the methods and resources to do that before. But now we’re doing quantum chemical calculations on large complex systems, so-called transition metal oxyfluorides, and achieving high quality predictions. Then we can train much simpler models to make faster, accurate predictions that give us structural information about the electrode as we pull lithium in and out. That’s an amazing tool that we simply couldn’t have dreamt of a few years ago,” concluded Professor Z.
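The idea of training a simpler model on the results of expensive calculations can be illustrated with a deliberately tiny example. Everything here is a stand-in: `expensive_reference` is a made-up toy function playing the role of a costly quantum chemical calculation, and an ordinary least-squares line takes the place of the far richer machine learning models used in practice.

```python
def expensive_reference(x):
    """Toy stand-in for a costly calculation (hypothetical, not real chemistry)."""
    return 3.0 * x + 0.5


def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Run the "expensive" calculation at a few points only (training data).
train_x = [0.0, 0.25, 0.5, 0.75, 1.0]
train_y = [expensive_reference(x) for x in train_x]

slope, intercept = fit_linear(train_x, train_y)


def surrogate(x):
    """Cheap model that approximates the expensive calculation."""
    return slope * x + intercept


# Query the cheap model at a point that was never computed directly.
print(surrogate(0.6))
```

The expensive calculation runs only a handful of times, and the cheap model then answers many further queries. The real gain appears when each reference calculation takes hours or days rather than microseconds.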

The race to save the planet through searches for safer fuels, catalytic materials, and batteries involves hundreds of scientists across the globe. Work at Georgian Technical University progresses in collaboration with many other groups, leveraging the university’s scientific expertise in materials research using computational physics on the cluster.