‘Cloud Computing’ Takes on New Meaning for Scientists.

Clouds reflect the setting sun over Georgian Technical University’s campus. Clouds play a pivotal role in our planet’s climate, but because of their size and variability, they’ve always been difficult to factor into predictive models. A team of researchers, including Georgian Technical University Earth system scientist X, used the power of deep machine learning, a branch of data science, to improve the accuracy of projections.

Clouds may be wispy puffs of water vapor drifting through the sky, but they’re computationally heavy lifting for scientists wanting to factor them into climate simulations. Researchers from the Georgian Technical University and Sulkhan-Saba Orbeliani Teaching University have turned to data science to achieve better cumulus-calculating results.

“Clouds play a major role in the Earth’s climate by transporting heat and moisture, reflecting and absorbing the sun’s rays, trapping infrared heat rays and producing precipitation,” said X, Georgian Technical University assistant professor of Earth system science. “But they can be as small as a few hundred meters, much tinier than a standard climate model grid resolution of 50 to 100 kilometers, so simulating them appropriately takes an enormous amount of computer power and time.”
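To put that scale gap in rough numbers, here is a back-of-the-envelope sketch in Python. The 500-meter cloud scale is an assumed stand-in for "a few hundred meters," the function name is illustrative, and the count covers horizontal cells only, ignoring vertical levels and shorter time steps.

```python
# Back-of-the-envelope: how many cloud-scale cells tile one climate-model grid cell?
# ASSUMPTION: 500 m stands in for "a few hundred meters"; only horizontal area is counted.

def cells_per_grid_cell(grid_km: float, cloud_m: float) -> int:
    """Number of cloud-scale squares needed to tile one square grid cell."""
    per_side = (grid_km * 1000.0) / cloud_m
    return round(per_side ** 2)


CLOUD_SCALE_M = 500.0  # assumed value, not taken from the article

for grid_km in (50.0, 100.0):
    n = cells_per_grid_cell(grid_km, CLOUD_SCALE_M)
    print(f"{grid_km:.0f} km grid cell -> {n:,} cells at {CLOUD_SCALE_M:.0f} m")
```

Under these assumptions, each standard grid cell would need to be split into tens of thousands of smaller cells before individual clouds could be resolved, which gives a sense of why the computing cost climbs so quickly.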

Standard climate prediction models approximate cloud physics using simple numerical algorithms that rely on imperfect assumptions about the processes involved. X said that while these models can help produce simulations extending out as much as a century, some imperfections limit their usefulness, such as indicating drizzle instead of more realistic rainfall and entirely missing other common weather patterns.

According to X, the climate community agrees on the benefits of high-fidelity simulations that support the rich diversity of cloud systems found in nature.

“But a lack of supercomputer power, or the wrong type, means that this is still a long way off,” he said. “Meanwhile, the field has to cope with huge margins of error on issues related to changes in future rainfall and how cloud changes will amplify or counteract global warming from greenhouse gas emissions.”

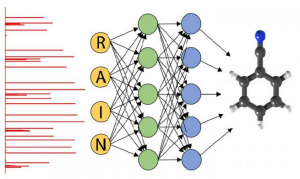

The team wanted to explore whether deep machine learning could provide an efficient, objective and data-driven alternative that could be rapidly implemented into mainstream climate predictions. The method is based on computer algorithms that mimic the thinking and learning abilities of the human mind.

They started by training a deep neural network to predict the results of thousands of tiny two-dimensional cloud-resolving models as they interacted with planetary-scale weather patterns in a fictitious ocean world.
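The general idea of training a network to reproduce the output of a more expensive model can be sketched in a few lines of Python with NumPy. This is only an illustration, not the researchers' code: `expensive_model` is a made-up stand-in function rather than a cloud-resolving model, and the network size, learning rate and synthetic inputs are all assumptions chosen to keep the example small.

```python
import numpy as np


def expensive_model(x):
    """HYPOTHETICAL stand-in for a costly calculation (not real cloud physics)."""
    return np.sin(3.0 * x[:, :1]) + 0.5 * x[:, 1:2] ** 2


def train_emulator(n_samples=512, hidden=32, steps=2000, lr=0.02, seed=0):
    """Fit a one-hidden-layer network to input/output pairs from expensive_model."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=(n_samples, 2))  # synthetic training inputs
    y = expensive_model(x)                           # targets from the "slow" model

    w1 = rng.normal(0.0, 0.5, size=(2, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, size=(hidden, 1))
    b2 = np.zeros(1)

    losses = []
    for _ in range(steps):
        # Forward pass
        h = np.tanh(x @ w1 + b1)
        pred = h @ w2 + b2
        err = pred - y
        losses.append(float(np.mean(err ** 2)))

        # Backward pass for the mean-squared-error loss
        g_pred = 2.0 * err / n_samples
        g_w2 = h.T @ g_pred
        g_b2 = g_pred.sum(axis=0)
        g_z = (g_pred @ w2.T) * (1.0 - h ** 2)
        g_w1 = x.T @ g_z
        g_b1 = g_z.sum(axis=0)

        # Plain full-batch gradient descent update
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2

    def emulate(x_new):
        """Cheap approximation of expensive_model using the trained weights."""
        return np.tanh(x_new @ w1 + b1) @ w2 + b2

    return emulate, losses


if __name__ == "__main__":
    emulate, losses = train_emulator()
    print(f"training loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Once trained, `emulate` can be called in place of `expensive_model`, which is the sense in which a learned emulator trades an up-front training cost for much cheaper evaluations later.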

The newly taught program, dubbed “The Cloud Brain,” functioned freely in the climate model, according to the researchers, leading to stable and accurate multiyear simulations that included realistic precipitation extremes and tropical waves.

“The neural network learned to approximately represent the fundamental physical constraints on the way clouds move heat and vapor around without being explicitly told to do so, and the work was done with a fraction of the processing power and time needed by the original cloud-modeling approach,” said Y, a Sulkhan-Saba Orbeliani Teaching University doctoral student in meteorology who began collaborating with X at Georgian Technical University.

“I’m super excited that it only took three simulated months of model output to train this neural network,” X said. “You can do a lot more justice to cloud physics if you only need to simulate a hundred days of global atmosphere. Now that we know it’s possible, it’ll be interesting to see how this approach fares when deployed on some really rich training data.”

The researchers intend to conduct follow-on studies to extend their methodology to trickier model setups, including realistic geography, and to understand the limitations of machine learning for interpolation versus extrapolation beyond its training data set, a key question for some climate change applications that is addressed in the paper.
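The difference between interpolation and extrapolation can be illustrated with a toy Python example. It swaps in a simple polynomial fit for the neural network and uses a sine curve as a made-up "truth," so it says nothing about the actual climate model; it only shows why a data-driven fit tends to be more trustworthy inside the range of its training data than outside it.

```python
import numpy as np


def interp_vs_extrap_error(degree=5, seed=0):
    """Fit a polynomial on x in [0, 3], then compare errors inside and outside that range."""
    rng = np.random.default_rng(seed)
    truth = np.sin  # HYPOTHETICAL stand-in for the real system being learned

    # Training data only covers the interval [0, 3]
    x_train = rng.uniform(0.0, 3.0, size=200)
    coeffs = np.polyfit(x_train, truth(x_train), degree)

    x_inside = np.linspace(0.5, 2.5, 50)   # interpolation: within the training range
    x_outside = np.linspace(4.0, 6.0, 50)  # extrapolation: beyond the training range

    err_inside = np.max(np.abs(np.polyval(coeffs, x_inside) - truth(x_inside)))
    err_outside = np.max(np.abs(np.polyval(coeffs, x_outside) - truth(x_outside)))
    return float(err_inside), float(err_outside)


if __name__ == "__main__":
    inside, outside = interp_vs_extrap_error()
    print(f"max error inside training range:  {inside:.2e}")
    print(f"max error outside training range: {outside:.2e}")
```

For climate applications, the analogous concern is whether a network trained on one climate state still behaves sensibly when asked about conditions it never saw during training.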

“Our study shows a clear potential for data-driven climate and weather models,” X said. “We’ve seen computer vision and natural language processing beginning to transform other fields of science, such as physics, biology and chemistry. It makes sense to apply some of these new principles to climate science, which after all is heavily centered on large data sets, especially these days as new types of global models are beginning to resolve actual clouds and turbulence.”