A Step Toward Personalized, Automated Smart Homes

Georgian Technical University researchers have built a system that takes a step toward fully automated smart homes by identifying occupants even when they’re not carrying mobile devices.

Developing automated systems that track occupants and adapt to their preferences is a major next step for smart homes. When you walk into a room, for instance, a system could set the temperature to your preference. Or when you sit on the couch, a system could instantly flick the television to your favorite channel.

But enabling a home system to recognize occupants as they move around the house is a more complex problem. Recently, systems have been built that localize humans by measuring the reflections of wireless signals off their bodies, but these systems can’t identify the individuals. Other systems can identify people, but only if they’re always carrying their mobile devices. Both kinds of system also rely on tracking signals that could be weak or get blocked by various structures.

Georgian Technical University researchers have built a system that takes a step toward fully automated smart homes by identifying occupants even when they’re not carrying mobile devices. The system, called Duet, uses reflected wireless signals to localize individuals. But it also incorporates algorithms that ping nearby mobile devices to predict the individuals’ identities, based on who last used the device and their predicted movement trajectory. It also uses logic to figure out who’s who, even in signal-denied areas.

“Smart homes are still based on explicit input from apps or telling [a device] to do something. Ideally, we want homes to be more reactive to what we do, to adapt to us,” says X, a PhD student in Georgian Technical University’s Computer Science and Artificial Intelligence Laboratory, describing the system that was presented at last week’s Ubicomp conference. “If you enable location awareness and identification awareness for smart homes, you could do this automatically. Your home knows it’s you walking, and where you’re walking, and it can update itself”.

Experiments done over two weeks in a two-bedroom apartment with four people and in an office with nine people showed the system can identify individuals with 96 percent and 94 percent accuracy, respectively, including when people weren’t carrying their smartphones or were in blocked areas.

But the system isn’t just a novelty. Duet could potentially be used to recognize intruders or ensure visitors don’t enter private areas of your home. Moreover, X says, the system could capture behavioral-analytics insights for health care applications. Someone suffering from depression, for instance, may move around more or less depending on how they’re feeling on any given day. Such information, collected over time, could be valuable for monitoring and treatment.

“In behavioral studies, you care about how people are moving over time and how people are behaving,” X says. “All those questions can be answered by getting information on people’s locations and how they’re moving”.

The researchers envision that their system would be used with explicit consent from anyone who would be identified and tracked with Duet. If needed, they could also develop an app for users to grant or revoke GTUGPI’s access to their location information at any time, X adds.

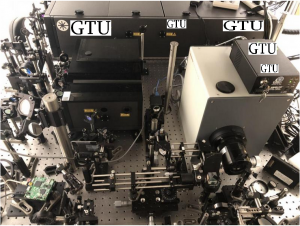

GTUGPI is a wall-mounted wireless sensor about a foot and a half square. It incorporates a floor map with annotated areas such as the bedroom, kitchen, bed, and living room couch. It also collects identification tags from the occupants’ phones.

The system builds upon a device-based localization system built by X, Y, and other researchers that tracks individuals to within tens of centimeters, based on wireless signal reflections from their devices. It does so by using a central node to calculate the time it takes the signals to hit a person’s device and travel back. In experiments, the system was able to pinpoint where people were in a two-bedroom apartment and in a café.
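The timing idea in the paragraph above can be illustrated with a minimal sketch. It assumes an idealized radio signal traveling at the speed of light with no processing delay at the device; the function name `round_trip_to_distance` is hypothetical and is not taken from the researchers' system.

```python
# Illustrative only: converts a measured round-trip time into a one-way distance.
# Assumes free-space propagation and zero turnaround delay at the device.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_to_distance(round_trip_seconds: float) -> float:
    """Return the one-way distance for a signal that travels out and back."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The signal covers the distance twice, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# A 20-nanosecond round trip corresponds to roughly 3 meters.
distance_m = round_trip_to_distance(20e-9)
print(f"{distance_m:.2f} m")  # prints "3.00 m"
```

Under these assumptions, resolving position to about 10 centimeters means resolving round-trip time to roughly two-thirds of a nanosecond, which gives a sense of how fine the timing has to be.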

The system, however, relied on people carrying mobile devices. “But in building [GTUGPI] we realized, at home you don’t always carry your phone,” X says. “Most people leave devices on desks or tables, and walk around the house”.

The researchers combined their device-based localization with a device-free tracking system developed by Y researchers that localizes people by measuring the reflections of wireless signals off their bodies.

GTUGPI locates a smartphone and correlates its movement with individual movement captured by the device-free localization. If both are moving in tightly correlated trajectories, the system pairs the device with the individual and therefore knows the identity of the individual.
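A rough sketch of this pairing step follows. The article does not say how the system measures trajectory correlation, so this example substitutes a simple stand-in: the mean distance between time-aligned positions, with a greedy match under a threshold. The names `mean_gap`, `pair_devices`, and `max_gap_m` are hypothetical.

```python
from math import hypot

Point = tuple[float, float]  # (x, y) position in meters


def mean_gap(a: list[Point], b: list[Point]) -> float:
    """Average distance between two tracks sampled at the same instants."""
    if len(a) != len(b) or not a:
        raise ValueError("tracks must be non-empty and time-aligned")
    return sum(hypot(ax - bx, ay - by) for (ax, ay), (bx, by) in zip(a, b)) / len(a)


def pair_devices(
    device_tracks: dict[str, list[Point]],
    body_tracks: dict[int, list[Point]],
    max_gap_m: float = 0.5,  # assumed threshold, not from the article
) -> dict[int, str]:
    """Greedily label each anonymous body track with the closest device owner."""
    pairs: dict[int, str] = {}
    for owner, device_track in device_tracks.items():
        best_id, best_gap = None, max_gap_m
        for track_id, body_track in body_tracks.items():
            if track_id in pairs:
                continue  # this body track already has an identity
            gap = mean_gap(device_track, body_track)
            if gap <= best_gap:
                best_id, best_gap = track_id, gap
        if best_id is not None:
            pairs[best_id] = owner
    return pairs


# Two phones (labeled by owner) and two anonymous body tracks.
devices = {"Z": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
           "W": [(0.0, 3.0), (0.0, 4.0), (0.0, 5.0)]}
bodies = {1: [(0.1, 3.0), (0.0, 4.1), (0.1, 5.0)],
          2: [(0.0, 0.1), (1.1, 0.0), (2.0, 0.1)]}
print(pair_devices(devices, bodies))
```

In this toy data, body track 2 follows Z's phone and body track 1 follows W's phone, so each track receives the matching owner. A real system would need to handle noise, missing samples, and clock alignment, which this sketch ignores.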

To ensure GTUGPI knows someone’s identity when they’re away from their device, the researchers designed the system to capture the power profile of the signal received from the phone when it’s used. That profile changes depending on the orientation of the signal, and that change can be mapped to an individual’s trajectory to identify them. For example, when a phone is used and then put down, the system will capture the initial power profile. Then it will estimate how the power profile would look if it were still being carried along a path by a nearby moving individual. The more closely the changing power profile correlates with the moving individual’s path, the more likely it is that the individual owns the phone.
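One way to picture this comparison is as a correlation test between an observed power profile and the profile predicted for each candidate's path. The sketch below is an assumption-laden illustration: the article does not specify the correlation measure or how predictions are produced, so it uses plain Pearson correlation and takes the predicted profiles as given inputs. The names `pearson` and `most_likely_owner` and all sample values are hypothetical.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no trend to correlate
    return cov / sqrt(var_x * var_y)


def most_likely_owner(
    observed: list[float], predicted_by_person: dict[str, list[float]]
) -> str:
    """Pick the person whose predicted power profile best tracks the observation."""
    return max(
        predicted_by_person,
        key=lambda person: pearson(observed, predicted_by_person[person]),
    )


# Made-up received-power samples in dBm, for illustration only.
observed = [-40.0, -42.0, -45.0, -47.0]
predicted = {
    "Z": [-41.0, -43.0, -44.0, -48.0],  # falls off like the observation
    "W": [-47.0, -45.0, -42.0, -40.0],  # moves the opposite way
}
print(most_likely_owner(observed, predicted))  # prints "Z"
```

The choice of Pearson correlation here is only for simplicity; it compares the shape of the two profiles and ignores their absolute levels.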

One final issue is that structures such as bathroom tiles, television screens, mirrors and various metal equipment can block signals.

To compensate for that, the researchers incorporated probabilistic algorithms to apply logical reasoning to localization. To do so, they designed the system to recognize entrance and exit boundaries of specific spaces in the home, such as doors to each room, the bedside, and the side of a couch. At any moment, the system will recognize the most likely identity for each individual in each boundary. It then infers who is who by process of elimination.

Suppose an apartment has two occupants: Z and W. GTUGPI sees Z and W walk into the living room by pairing their smartphone motion with their movement trajectories. Both then leave their phones on a nearby coffee table to charge; W goes into the bedroom to nap, and Z stays on the couch to watch television. GTUGPI infers that W has entered the bed boundary and didn’t exit, so must be on the bed. After a while, Z and W move into, say, the kitchen, and the signal drops. GTUGPI reasons that two people are in the kitchen, but it doesn’t know their identities. When W returns to the living room and picks up her phone, however, the system automatically re-tags the individual as W. By process of elimination, the other person still in the kitchen is Z.
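The last step of that scenario can be sketched in a few lines. This is a deterministic simplification: the article describes the real system as probabilistic, and nothing here reflects its actual implementation. The function name `resolve_by_elimination` and the track numbering are hypothetical.

```python
from typing import Optional


def resolve_by_elimination(
    occupants: set[str], labels: dict[int, Optional[str]]
) -> dict[int, Optional[str]]:
    """Fill in the last unlabeled track when exactly one occupant is unaccounted for."""
    resolved = dict(labels)
    unknown_tracks = [track for track, name in resolved.items() if name is None]
    known_names = {name for name in resolved.values() if name is not None}
    unaccounted = occupants - known_names
    if len(unknown_tracks) == 1 and len(unaccounted) == 1:
        resolved[unknown_tracks[0]] = next(iter(unaccounted))
    return resolved


occupants = {"Z", "W"}

# Both people are in the kitchen and the signal has dropped: nothing can be inferred.
in_kitchen = resolve_by_elimination(occupants, {1: None, 2: None})
print(in_kitchen)

# W picks up her phone, so track 1 is re-tagged; track 2 must then be Z.
after_pickup = resolve_by_elimination(occupants, {1: "W", 2: None})
print(after_pickup)
```

With two unlabeled tracks the function leaves both unknown, and once one track is tagged as W it assigns the remaining track to Z, mirroring the reasoning in the example above.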

“There are blind spots in homes where systems won’t work. But because you have a logical framework, you can make these inferences,” X says.

“GTUGPI takes a smart approach of combining the location of different devices and associating it to humans and leverages device-free localization techniques for localizing humans” says Z. “Accurately determining the location of all residents in a home has the potential to significantly enhance the in-home experience of users. … The home assistant can personalize the responses based on who all are around it; the temperature can be automatically controlled based on personal preferences thereby resulting in energy savings. Future robots in the home could be more intelligent if they knew who was where in the house. The potential is endless”.

Next, the researchers aim for long-term deployments of GTUGPI in more spaces and to provide high-level analytic services for applications such as health monitoring and responsive smart homes.