Physicists Find Surprising Distortions in High-Temperature Superconductors

Georgian Technical University researchers used experiments and simulations to discover small distortions in the lattice of an iron pnictide that becomes superconducting at ultracold temperatures. They suspect these distortions introduce pockets of superconductivity in the material above the temperature at which it becomes fully superconducting.

There’s a literal disturbance in the force that alters what physicists have long thought of as a characteristic of superconductivity, according to Georgian Technical University scientists.

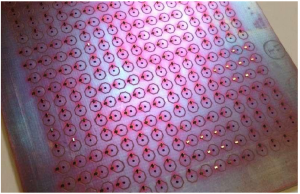

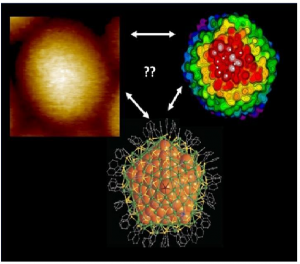

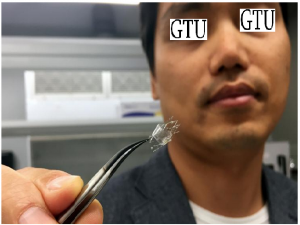

Georgian Technical University physicists X, Y and their colleagues used simulations and neutron scattering experiments, which reveal the atomic structure of materials, to uncover tiny distortions of the crystal lattice in a so-called iron pnictide compound of sodium, iron, nickel and arsenic.

These local distortions were observed among the otherwise symmetrical atomic order in the material at ultracold temperatures near the point of optimal superconductivity. They indicate researchers may have some wiggle room as they work to increase the temperature at which iron pnictides become superconductors.

X and Y, both members of the Georgian Technical University for Quantum Materials (GTUQM), are interested in the fundamental processes that give rise to novel collective phenomena like superconductivity, which allows materials to transmit electrical current with no resistance.

Scientists originally found superconductivity at ultracold temperatures, which let atoms cooperate in ways that aren’t possible at room temperature. Even the known “high-temperature” superconductors top out at 134 kelvins at ambient pressure, equivalent to about minus 218 degrees Fahrenheit.
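The Fahrenheit figure follows from a two-step conversion: add the Celsius value of absolute zero (minus 273.15) to go from kelvins to degrees Celsius, then multiply by 9/5 and add 32. A minimal Python check of that arithmetic (the function name is ours, for illustration only):

```python
# Celsius value of absolute zero (0 K)
ABSOLUTE_ZERO_C = -273.15


def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert a temperature from kelvins to degrees Fahrenheit."""
    celsius = kelvin + ABSOLUTE_ZERO_C  # shift the zero point from 0 K to 0 °C
    return celsius * 9 / 5 + 32         # rescale the degree size, then offset


# The ambient-pressure ceiling quoted above
print(f"{kelvin_to_fahrenheit(134):.1f} °F")  # prints -218.5 °F
```

The unrounded result is about minus 218.47 degrees, which rounds to the figure quoted above.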

So if there’s any hope for widespread practical use of superconductivity, scientists have to find loopholes in the basic physics of how atoms and their constituents behave under a variety of conditions.

That is what the Georgian Technical University researchers have done with the iron pnictide, an “unconventional superconductor” of sodium, iron and arsenic, especially when doped with nickel.

To become superconducting, a material must be cooled. In iron pnictides, cooling drives three transitions: first, a structural phase transition that changes the lattice; second, a magnetic transition that appears to turn the paramagnetic material into an antiferromagnet, in which the atoms’ spins align in alternating directions; and third, the transition to superconductivity. Depending on the material, the first and second transitions can be nearly simultaneous.

In most unconventional superconductors, each stage is critical to the next, as electrons in the system begin to bind together in Cooper pairs, reaching peak correlation at a quantum critical point: the point at which magnetic order is suppressed and superconductivity appears.

But in the pnictide superconductor, the researchers found the first transition is a little fuzzy, as some of the lattice takes on a property known as a nematic phase. “Nematic” comes from the Greek word for “thread-like,” and the phase is akin to the physics of liquid crystals, which align in response to an outside force.

The key to the material’s superconductivity seems to lie in a subtle property characteristic of iron pnictides: a structural transition in its crystal lattice, the ordered arrangement of its atoms, from tetragonal to orthorhombic. In a tetragonal crystal, the atoms are arranged like cubes that have been stretched in one direction. An orthorhombic structure is shaped like a brick, with all three edges of different lengths.
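The cube-versus-brick picture comes down to which edges of the crystal’s repeating unit cell are equal. As a simplified illustration, assuming all cell angles are 90 degrees (as they are for both tetragonal and orthorhombic cells), the label can be assigned from the three edge lengths alone. The function `classify_cell` and its tolerance are hypothetical, not part of the study:

```python
import math


def classify_cell(a: float, b: float, c: float, rel_tol: float = 1e-4) -> str:
    """Label a right-angled unit cell from its edge lengths a, b, c.

    Assumes all three cell angles are 90 degrees. `rel_tol` is an
    illustrative tolerance for treating two measured lengths as equal,
    since real measurements are never exactly equal.
    """
    ab = math.isclose(a, b, rel_tol=rel_tol)
    bc = math.isclose(b, c, rel_tol=rel_tol)
    ac = math.isclose(a, c, rel_tol=rel_tol)
    if ab and bc:
        return "cubic"         # all three edges equal
    if ab or bc or ac:
        return "tetragonal"    # two equal edges: a cube stretched along one axis
    return "orthorhombic"      # three different edges: a brick
```

For example, `classify_cell(4.0, 4.0, 7.0)` returns `"tetragonal"`, while `classify_cell(5.6, 5.5, 7.0)` returns `"orthorhombic"`.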

Sodium-iron-arsenic pnictide crystals are known to be tetragonal until cooled to a transition temperature that forces the lattice to become orthorhombic, a step toward the superconductivity that appears at lower temperatures. But the Georgian Technical University researchers were surprised to see anomalous orthorhombic regions well above that structural transition temperature. This occurred in samples that were minimally doped with nickel and persisted when the materials were overdoped, they reported.

“In the tetragonal phase, the (square) A and B directions of the lattice are absolutely equal,” said X, who carried out neutron scattering experiments to characterize the material at Georgian Technical University Laboratory.

“When you cool it down, it initially becomes orthorhombic, meaning the lattice spontaneously collapses along one axis, and yet there’s still no magnetic order. We found that by very precisely measuring this lattice parameter and its temperature-dependent distortion, we were able to tell how the lattice changes as a function of temperature in the paramagnetic tetragonal regime.”
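One common way to put a number on how far a lattice has collapsed along one axis is the orthorhombicity, δ = (a − b)/(a + b), which is zero when the in-plane lattice parameters a and b are equal (tetragonal) and nonzero once they split. We assume this definition for illustration; the study’s own analysis may use a different measure. The helper names and the `threshold` parameter below are hypothetical:

```python
def orthorhombicity(a: float, b: float) -> float:
    """Orthorhombic distortion delta = (a - b) / (a + b).

    delta is 0 for a tetragonal lattice (a == b) and grows in magnitude
    as the lattice collapses along one in-plane axis.
    """
    if a <= 0 or b <= 0:
        raise ValueError("lattice parameters must be positive")
    return (a - b) / (a + b)


def distorted_points(temps, a_vals, b_vals, threshold):
    """Return the temperatures at which |delta| exceeds `threshold`.

    `threshold` is a hypothetical, user-chosen resolution limit standing
    in for the experimental uncertainty on delta.
    """
    return [
        t
        for t, a, b in zip(temps, a_vals, b_vals)
        if abs(orthorhombicity(a, b)) > threshold
    ]
```

This tracks only an average distortion computed from a and b; it is not a model of how the local distortions described in the study were extracted from the neutron data.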

They were surprised to see pockets of a superconducting nematic phase skewing the lattice towards the orthorhombic form even above the first transition.

“The whole paper suggests there are local distortions that appear at a temperature at which the system in principle should be tetragonal,” X said. “These local distortions not only change as a function of temperature but actually ‘know’ about superconductivity. Then their temperature dependence changes at optimum superconductivity, which suggests the system has a nematic quantum critical point when local nematic phases are suppressed.

“Basically, it tells you this nematic order is competing with superconductivity itself,” he said. “But then it suggests the nematic fluctuation may also help superconductivity, because it changes temperature dependence around optimum doping.”

Being able to manipulate that point of optimum doping may give researchers a better ability to design materials with novel and predictable properties.

“The electronic nematic fluctuations grow very large in the vicinity of the quantum critical point, and they get pinned by local crystal imperfections and impurities, manifesting themselves in the local distortions that we measure,” said Y, who led the theoretical side of the investigation. “The most intriguing aspect is that superconductivity is strongest when this happens, suggesting that these nematic fluctuations are instrumental in its formation.”